From Elementary Word Forms to Production Search: How Text Analysis Powers Search

Last night, I helped my child with homework: we learned about word forms. We worked through the word "metal", including "metallic", "metalling" and "metalled." While this is an elementary school topic, it struck me: this is a problem we solve as engineers building search systems. If a user searches for "metallic", they should find documents containing "metal." Likewise, if someone queries "running", they should also be presented with results associated with "run."

Welcome to the world of text analysis. We will walk through an example using word forms and how Lucenia helps with this analysis to improve search.

The Search Problem That Mirrors Elementary Grammar

Here's the challenge: users don't think in exact matches. They search for "best laptops" when your product catalog says "laptop computer." They type "swimming", and your blog posts use "swim" and "swimmer." Without intelligent text analysis, these searches often fail, leaving users frustrated.

Traditional exact-match searching is akin to asking a child to recognize only "metal" and not "metallic"; this approach is unnecessarily rigid. Modern search engines must realize that words have relationships, variations and forms. Lucenia's analyzers become your hidden advantage for these relationships.

How Lucenia Analyzers Work

Lucenia transforms unstructured text into searchable tokens through analyzers with three components:

-

Character Filters modify text at the character level by removing HTML tags, replacing characters or normalizing Unicode. Think of it as [optional] preprocessing raw input to prepare for analysis.

-

Tokenizers split filtered text into individual tokens (typically words). The standard tokenizer breaks on word boundaries and removes punctuation, while the whitespace tokenizer splits on spaces. There is even a specialized thai tokenizer for languages that don't use spaces between words.

-

Token filters receive tokens from the tokenizer and transform them through lowercasing, removing stopwords, adding synonyms or (most relevant to our discussion of word forms) stemming words to their root forms.

Every analyzer needs exactly one tokenizer. The use of character filters and token filters is optional; each analyzer can have zero or more filters. This composable architecture gives you great flexibility in how you process text.

The Elementary School Connection: Stemming in Action

Remember our word forms homework? Here is how Lucenia handles the same challenge:

PUT /my_index

{

"settings": {

"analysis": {

"analyzer": {

"english_stemmer": {

"tokenizer": "standard",

"filter": ["lowercase", "porter_stem"]

}

}

}

},

"mappings": {

"properties": {

"content": {

"type": "text",

"analyzer": "english_stemmer"

}

}

}

}

With this configuration, all these word forms reduce to the same root:

- metal → metal

- metallic → metal

- metalling → metal

- metalled → metal

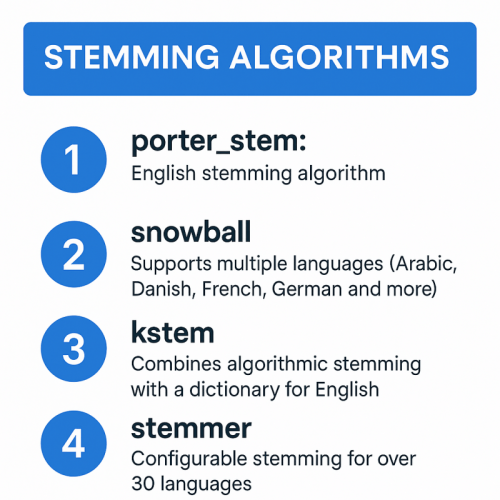

The porter_stem filter applies the Porter stemming algorithm, stripping common suffixes to find the linguistic root. Lucenia offers several stemming options:

Applying this analyzer means that whether your user searches for "metallic finish", "metal coating" or "metalled surface", they will find all relevant results. It's like teaching the search engine the same grammar rules your child learns in school, but on a massive scale.

Real-World Use Cases for Software Engineers

Ecommerce Search

Your users search for "running shoes", while products are tagged with "runner", "run" or "running shoe." With proper stemming and synonym filters, a single query can find them all. Add the synonym token filter to map "sneakers" to "shoes" and you have dramatically improved discovery.

Content Management Systems

Editors write articles about "optimization", "optimize" and "optimizing." Without text analysis, these result in separate topics. With analyzers, your "related articles" feature actually connects content that discusses the same concepts in different grammatical forms, giving you further reach and connecting with your audience.

Multi-Language Support

Building a global product? Lucenia supports over 30 language-specific analyzers, including French, German, Spanish, Arabic, Hindi, Thai and more. Each handles language-specific stemming rules and stopwords–no more building separate search logic for each locale.

Log Analysis

System logs contain variations like "ERROR", "error", "Err" and "err." A simple analyzer with the lowercase filter normalizes these variants, making it trivial to aggregate and search logs regardless of how applications format them.

Index Analyzers vs. Search Analyzers

Here is a crucial distinction: you can use different analyzers at index time versus search time. Index analyzers process documents as they're stored, while search analyzers process the query string.

Most of the time, you want the analyzers to match. If you stem during indexing but not during search (or vice versa), you will get confusing mismatches. However, there are legitimate use cases for asymmetric analysis, such as using edge n-grams at index time for autocomplete and standard tokenization for full queries.

Start Exploring Text Analysis Today

Text analysis is one of those features that seems simple enough–we break text into words, right? But as we've seen with our elementary school word forms example, the nuances matter. The difference between a frustrating search experience and a delightful one often comes down to how well your analyzers handle word variations.

Lucenia provides you with the building blocks to create sophisticated text analysis pipelines without reinventing the wheel in terms of linguistic algorithms. Whether you are building e-commerce search, content discovery or log aggregation, understanding analyzers will level up your implementation.

Ready to dive deeper? Explore the Lucenia analyzers documentation to see the full range of tokenizers, token filters and language-specific options available.

And the next time you are helping with homework about word forms, remember: you're basically teaching elementary linguistics–the same principles that power search engines serving millions of queries per second.